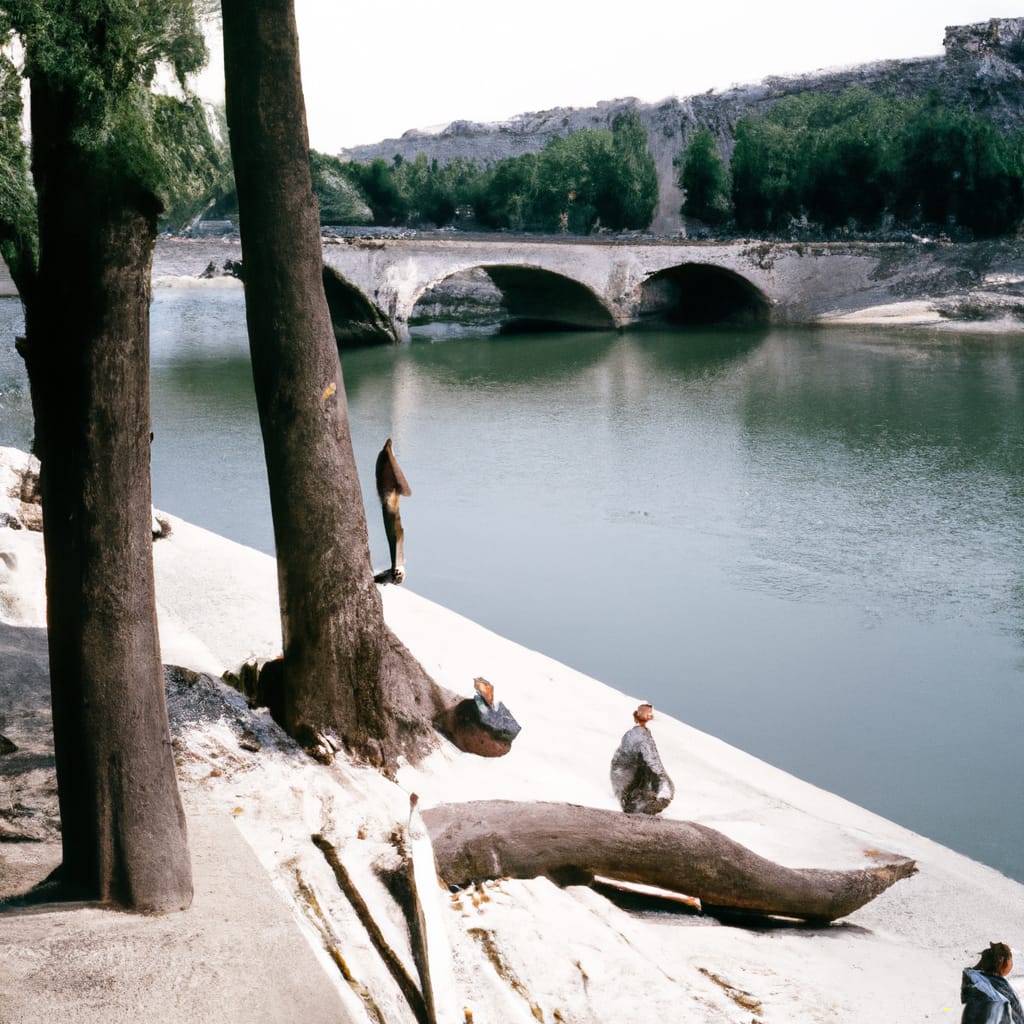

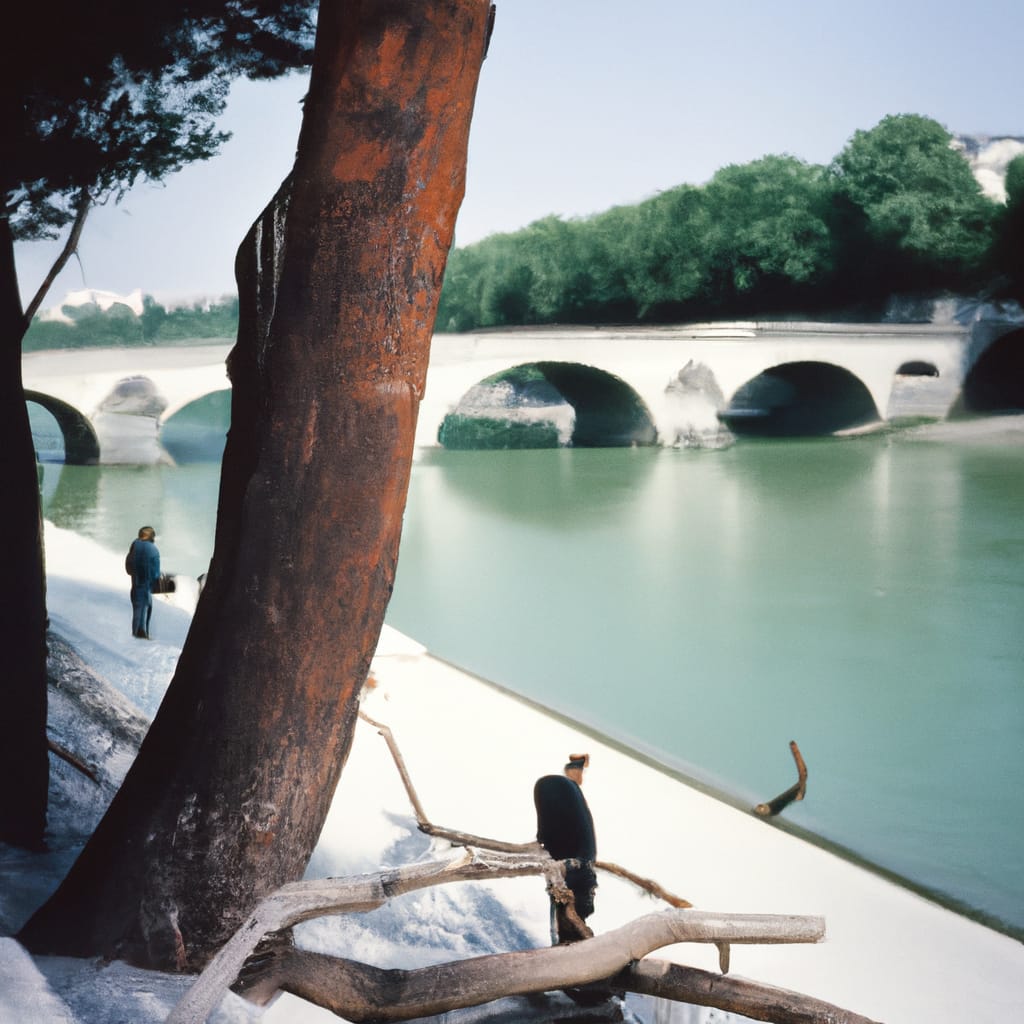

We keep hearing that AI cannot know more than what its algorithms have trained it to know, yet most people already sense that this is not true. The almost incomprehensible number of vectors, now approaching the trillion mark, and the almost infinite variety of possible combinations do not fit the idea that the system has been given concrete learning content. And yet, at least for AI image generators, this cannot be doubted: fed with millions upon millions of images and their labels, the generated images never leave the model of a complex combinatorics. That combinatorics, however, is so complex that human consciousness cannot grasp all the factors that have led to any given combination.

Thinking in combinations, and the assumption of an inherently eclectic modus operandi, have a direct effect on perception. As with a suspected counterfeit coin, we hold what is offered sideways against the light, as it were, and scan its surface; only we cannot bite on it yet. The result is subject to the examining gaze, at least for the time being. Then, however, an interaction can arise between the object of investigation and the investigator: no cheap copy, no dull imitation, no rigid superimposition is revealed.

What emerges instead is a free play of very different materials, loosely associable with sources of whose actual existence we know nothing. Why does the city of Paris, in the sequence of modifications of an old Kodachrome photograph of anglers on the Seine, wrap itself up like Christo’s Reichstag? Why do images emerge that resemble illustrations for “Waiting for Godot”? With the dried-up rivulet in the cartoon pastel at the end, is concern about climate change already inherent in the AI? You don’t have to be an AI expert to know that all these associations are ultimately nonsense. But why does our imagination not allow us to deny an imaginer its imagination?
Because to deny it would already be to concede that our imagination and the AI have no connection of their own, and that at best they produce one only by working together.