“Orientalism,” in the sense popularized by Edward Said in his 1978 book of the same name, describes the way in which Western cultures have historically depicted and understood the people and cultures of the Middle East and Asia. These depictions often rely on stereotypes and simplifications that reinforce a sense of Western superiority and exoticize the “Orient.”

AI image generators do not hold cultural biases or preconceptions of their own, but they are only as unbiased as the data they are trained on. If most of the images of the Middle East in a generator's training data were produced through an Orientalist lens, then the generator's output is likely to reflect the same biases.

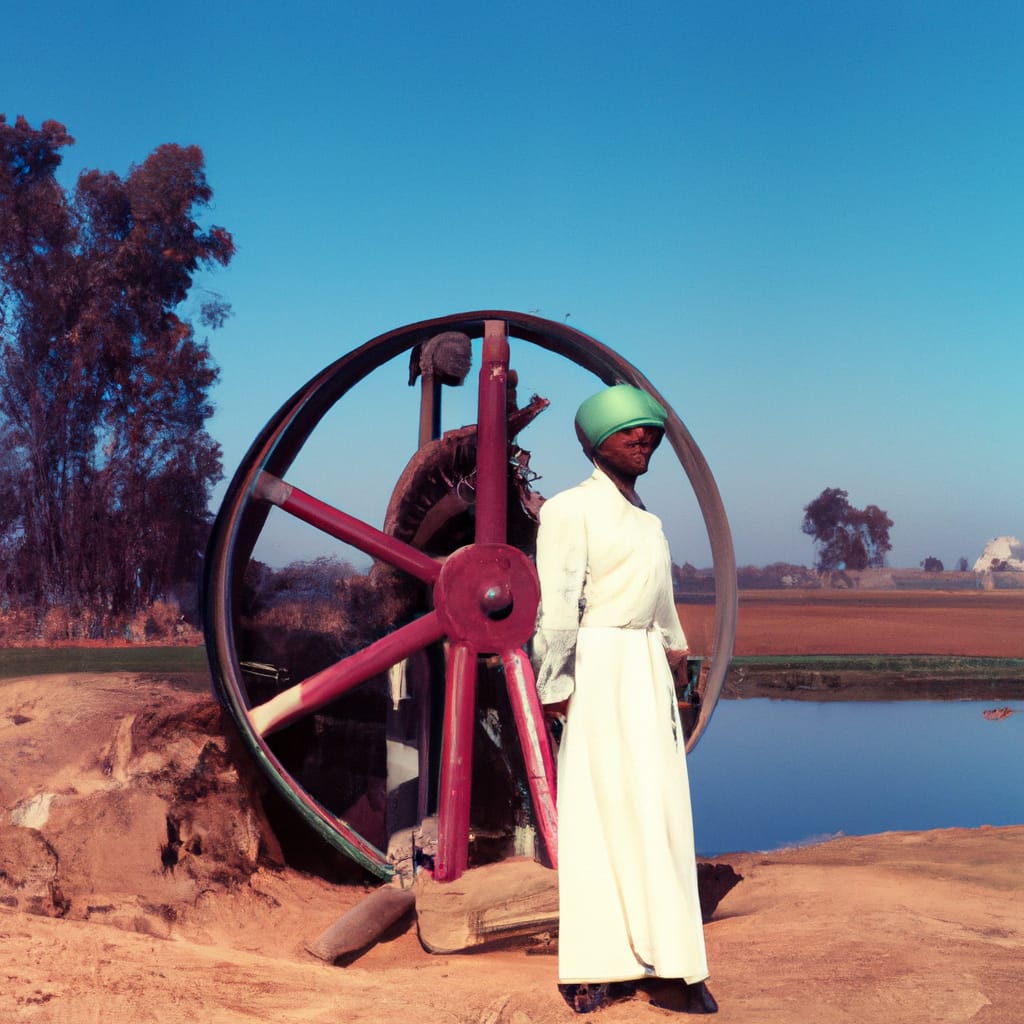

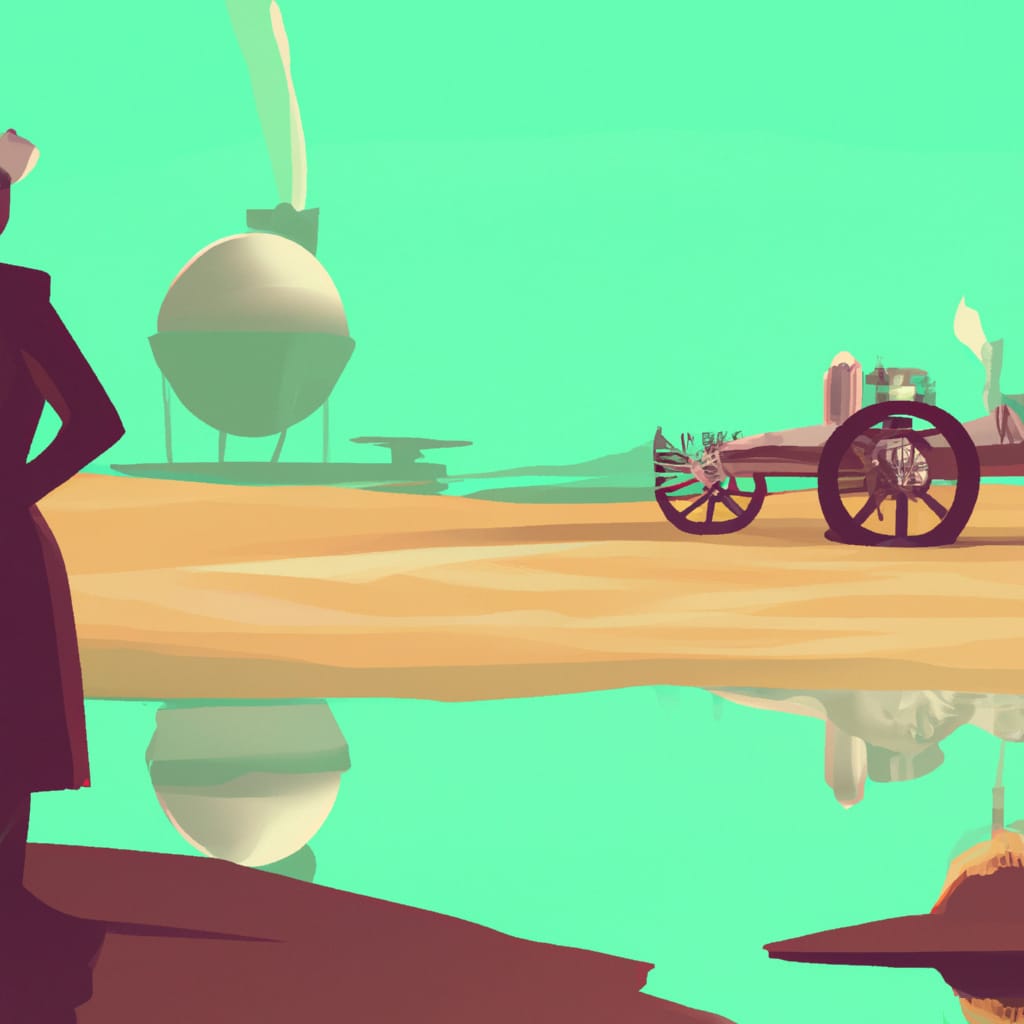

In recent years, there have been several reported examples of AI image generators producing stereotypical or offensive depictions of people from the Middle East, such as automatically adding headscarves to images of women or darkening skin tones. These examples suggest that Orientalist biases in the training data are carrying over into the generators' output.

It is therefore important for the creators and users of AI image generators to be aware of the potential for bias and to work actively to diversify and de-bias the training data. This means including images produced by artists and photographers from the Middle East themselves and avoiding images that reinforce Orientalist stereotypes.
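
One way to start acting on this is to measure who produced the images of a region in a dataset. The following is a minimal sketch, not a definitive auditing method. It assumes each image comes with metadata, and the field names `depicted_region` and `creator_origin`, the labels `"local"` and `"external"`, and the sample records are all hypothetical and chosen purely for illustration.

```python
from collections import Counter


def creator_origin_shares(records, region):
    """Return the share of each creator origin among images depicting `region`.

    `records` is a list of dicts with the hypothetical keys
    "depicted_region" and "creator_origin".
    """
    origins = Counter(
        r["creator_origin"] for r in records if r["depicted_region"] == region
    )
    total = sum(origins.values())
    if total == 0:
        # No images of this region in the dataset.
        return {}
    return {origin: count / total for origin, count in origins.items()}


# Hypothetical metadata, invented for this example only.
sample = [
    {"depicted_region": "Middle East", "creator_origin": "local"},
    {"depicted_region": "Middle East", "creator_origin": "external"},
    {"depicted_region": "Middle East", "creator_origin": "external"},
    {"depicted_region": "Middle East", "creator_origin": "external"},
    {"depicted_region": "Europe", "creator_origin": "local"},
]

shares = creator_origin_shares(sample, "Middle East")
print(shares)
```

A low share of locally produced images would not prove that a dataset is Orientalist, but it could flag where more images from Middle Eastern artists and photographers are needed.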